Description

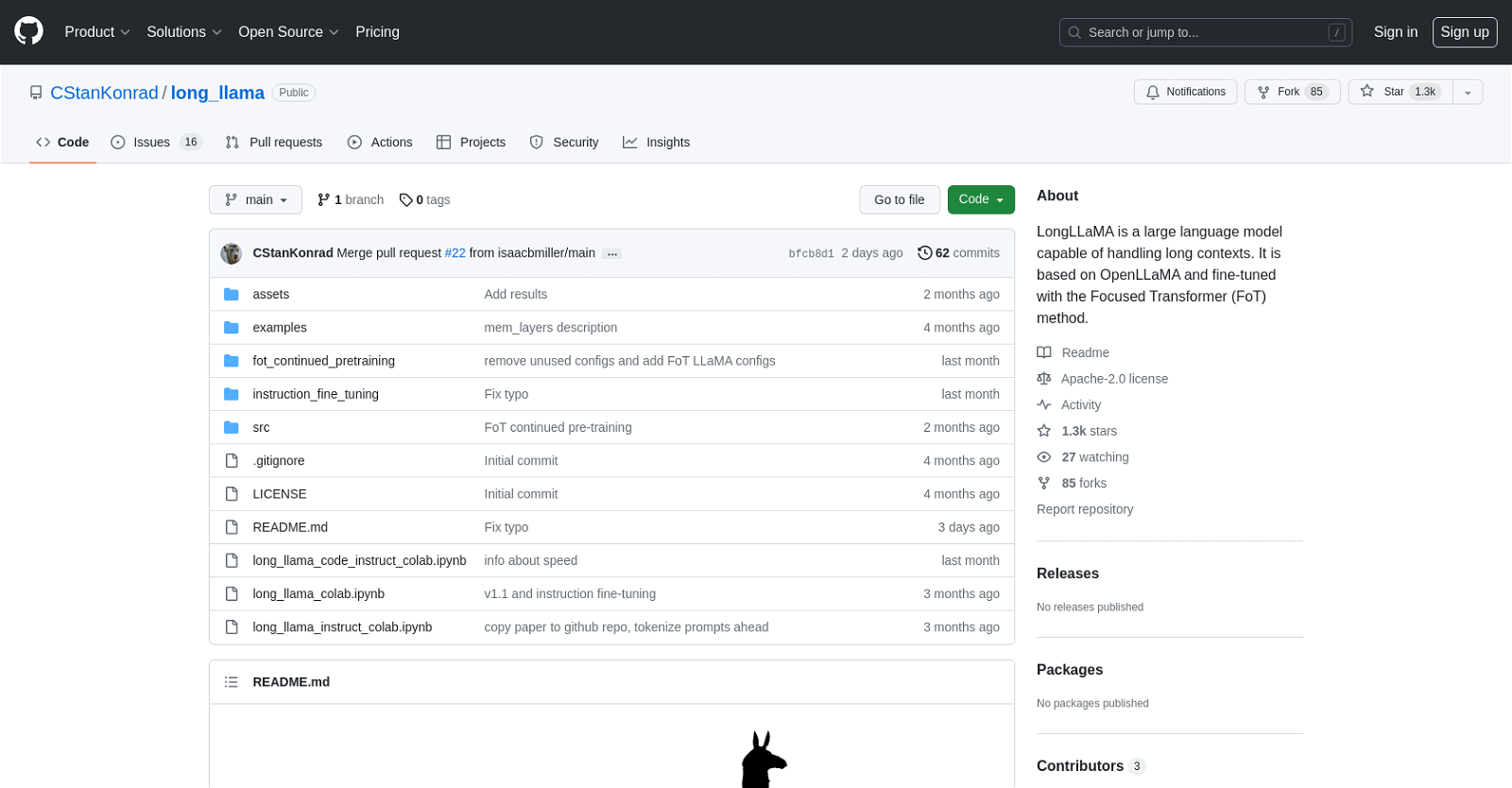

LongLLaMA is a large language model built on OpenLLaMA and fine-tuned with the Focused Transformer (FoT) method so that it can process and understand long contexts. It is hosted on GitHub in a public repository created by CStanKonrad.

What is this for?

Use LongLLaMA when a task requires a language model to work with long input contexts. It builds on OpenLLaMA and relies on Focused Transformer (FoT) fine-tuning to handle them.

Who is this for?

LongLLaMA is for developers and researchers working in natural language processing who need a language model that can process and understand long contexts.

Best Features

- Capable of handling long contexts up to 256k tokens

- Uses the FoT method to help the model focus on the relevant parts of a long input

- Applicable to natural language processing tasks such as text generation and sentiment analysis
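
To make the FoT bullet above more concrete, here is a toy sketch of attention that combines local key-value pairs with the top-k entries retrieved from an external memory, which is the general idea behind memory-augmented attention. It is plain Python for illustration only and is not LongLLaMA's implementation: the names `memory_attention`, `local_kv`, `memory_kv`, and `top_k` are hypothetical, and ranking memory entries by dot product is an assumed simplification.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_attention(query, local_kv, memory_kv, top_k=2):
    """Attend over local (key, value) pairs plus the top_k memory pairs
    whose keys score highest against the query (hypothetical helper)."""
    # Retrieve the memory entries most similar to the query.
    retrieved = sorted(
        memory_kv, key=lambda kv: dot(query, kv[0]), reverse=True
    )[:top_k]
    candidates = list(local_kv) + retrieved

    # Scaled dot-product attention over the combined candidates.
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, key) / scale for key, _ in candidates])

    # Weighted sum of the candidate values.
    dim = len(candidates[0][1])
    return [
        sum(w * value[i] for w, (_, value) in zip(weights, candidates))
        for i in range(dim)
    ]


# Toy data: one local pair and three memory pairs (all values are made up).
query = [1.0, 0.0]
local_kv = [([0.0, 1.0], [0.0, 0.0])]
memory_kv = [
    ([5.0, 0.0], [1.0, 1.0]),   # closely matches the query
    ([-5.0, 0.0], [9.0, 9.0]),  # points away from the query
    ([0.0, 0.0], [0.0, 0.0]),   # neutral
]
out = memory_attention(query, local_kv, memory_kv, top_k=1)
print(out)
```

With `top_k=1`, only the memory entry whose key best matches the query is retrieved, so the output is dominated by that entry's value and the mismatched entry never enters the attention computation.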