Description

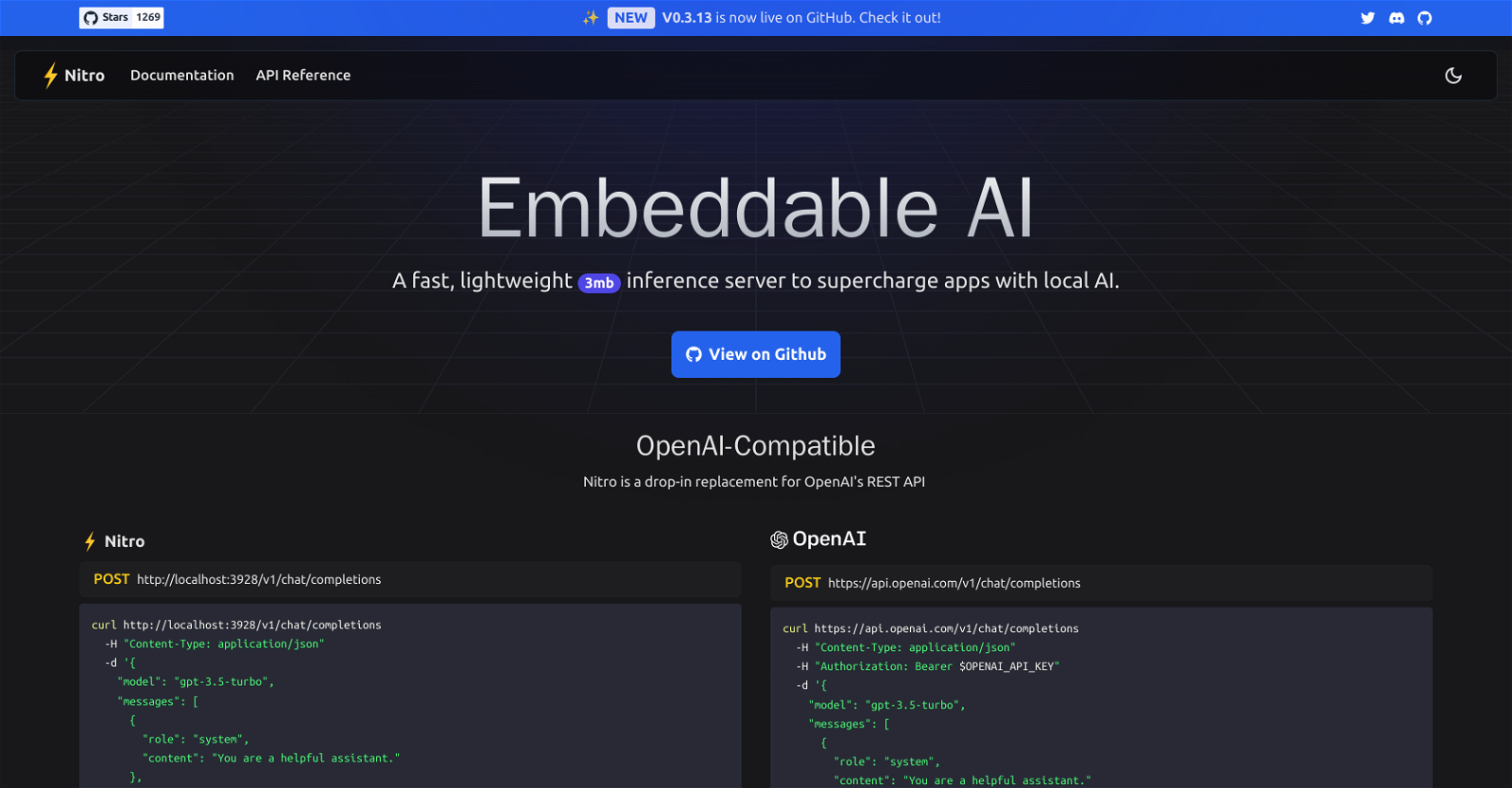

Nitro is an efficient C++ inference engine designed for edge computing applications. It is lightweight and embeddable, and serves as a fast inference server that adds local AI capabilities to apps. Nitro is fully open-source and compatible with OpenAI's REST API, making it a drop-in alternative. It offers operational and architectural flexibility, running on diverse CPU and GPU architectures, and integrates top-tier open-source AI libraries for versatility. Planned updates include AI capabilities such as think, vision, and speech.

What is this for?

Nitro is for running AI inference locally in edge computing applications. As a lightweight, embeddable C++ engine, it is designed to be integrated directly into products.

Who is this for?

Nitro is for app developers who want to implement local AI functionality efficiently. It also suits anyone who needs a fast, lightweight inference server to add AI capabilities to their apps.

Best Features

- Compatibility with OpenAI's REST API, positioning it as a viable drop-in alternative

- Operational and architectural flexibility to run on diverse CPU and GPU architectures, ensuring cross-platform compatibility

- Integration of top-tier open-source AI libraries for versatility and adaptability
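
To illustrate what "drop-in alternative" can mean in practice, here is a minimal Python sketch of an OpenAI-style chat completion request aimed at a local server. The host, port, path, and model name are assumptions chosen for illustration and are not taken from the description above; check the Nitro documentation for the actual values. The request and response shapes follow the OpenAI chat completions format.

```python
import json
import urllib.request

# Hypothetical local address: the host, port, and path prefix are assumptions.
BASE_URL = "http://localhost:3928/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it.

    "local-model" is a placeholder name, not a real model identifier.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response."""
    return response_body["choices"][0]["message"]["content"]
```

With a compatible server running at the assumed address, `extract_reply(send(build_chat_request("Hello")))` would return the model's reply. Because only `BASE_URL` identifies the server, code written this way can in principle switch between a hosted OpenAI-compatible endpoint and a local one by changing that single value.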